Recommendation letters are one of the most face valid predictors of academic and job performance; it is certainly intuitive that someone writing about someone else whom they know well should be able to provide an honest and objective assessment of that person’s capabilities. But despite their ubiquity, little research is available on the actual validity of recommendation letters in predicting academic and job performance. They look like they predict performance; but do they really?

There is certainly reason to be concerned. In the small research literature available on recommendation letters, the results don’t look good. Selection of writers is biased; usually, we don’t ask people who hate us to write letters for us. Writers themselves are then biased; many won’t agree to write recommendation letters if the only letter they could write would be a weak one. Among those who do write letters, the personality of the letter-writer may play a larger role in the content than the ability level of the recommendee. So given all that, are they still worth considering?

In a recent issue of the International Journal of Selection and Assessment, Kuncel, Kochevar and Ones (2014) examine the predictive value of recommendation letters for college and graduate school admissions, both in terms of raw relationships with various outcomes of interest and incrementally beyond standardized test scores and GPA. The short answer: letters do weakly predict outcomes, but generally don’t add much beyond test scores and GPA. For graduate students, the outcome for which letters do add some incremental predictive value is degree attainment (which the researchers argue is a more motivation-oriented outcome than either test scores or GPA) – but even then, not by much.

Kuncel and colleagues came to this conclusion by conducting a meta-analysis of the existing literature on recommendation letters, which unfortunately was not terribly extensive. The largest number of studies appearing in any particular analysis was 16 – most analyses only summarized 5 or 6 studies. Thus the confidence intervals surrounding their estimates are likely quite wide, leaving a lot of uncertainty in the precise estimates they identified. That doesn’t necessarily threaten the validity of their conclusions – since these are certainly the best estimates of recommendation letter validity that are available right now – but it does highlight the somewhat desperate need for more research in this area.

Another caveat to these findings – the studies included in any meta-analysis must have reported enough information to obtain correlation estimates of the relationships of interest. In this case, that means the included studies needed to have quantified recommendation letter quality. I suspect many people reading recommendation letters instead interpret those letters holistically – for example, reading the entire letter and forming a general judgment about how strong it was. That holistic judgment is probably then combined with other holistic judgments to make an actual selection decision. Given what we know about statistical versus holistic combination (i.e., there is basically no good reason to use holistic combination), any particular incremental value gained by using recommendation letters may be lost in such very human, very flawed judgments.

So the conclusion? At the very least, it doesn’t look like using recommendation letters hurts the validity of selection. If you want to use such letters, you will likely get the most impact by coming up with a reasonable numerical scale (e.g. 1 to 10) and assigning each letter you receive a value on that scale to indicate how strong the endorsement is. Then average that number with the other components of your statistically derived selection system (e.g. GPA and standardized test scores).
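As a rough illustration of what such a statistical combination could look like, here is a minimal sketch in Python. It assumes a unit-weighted composite, which is one common choice and not something the meta-analysis prescribes: each component is standardized to a z-score first, because a 1 to 10 letter rating, a GPA, and a test score sit on very different scales, and then the z-scores are averaged. The applicants and their numbers are hypothetical, as are the function names.

```python
from statistics import mean, pstdev


def zscores(values):
    """Standardize a list of numbers to mean 0 and standard deviation 1."""
    m = mean(values)
    s = pstdev(values)
    if s == 0:
        raise ValueError("all values are identical; cannot standardize")
    return [(v - m) / s for v in values]


def composite_scores(letter_ratings, gpas, test_scores):
    """Unit-weighted composite: the mean of each applicant's three z-scores."""
    columns = [zscores(letter_ratings), zscores(gpas), zscores(test_scores)]
    return [mean(applicant) for applicant in zip(*columns)]


# Hypothetical applicants (made-up numbers, for illustration only).
letter_ratings = [8, 5, 9, 6]          # 1-10 rating assigned to each letter
gpas = [3.9, 3.2, 3.4, 3.7]
test_scores = [160, 150, 165, 155]

scores = composite_scores(letter_ratings, gpas, test_scores)

# Applicant indices ordered from strongest to weakest composite.
ranking = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
print(ranking)
```

Equal weights are only a starting point; if you have outcome data, regression-derived weights would let each component count in proportion to how well it actually predicts.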

- Kuncel, N. R., Kochevar, R. J., & Ones, D. S. (2014). A meta-analysis of letters of recommendation in college and graduate admissions: Reasons for hope. International Journal of Selection and Assessment, 22(1), 101-107. doi:10.1111/ijsa.12060

A report from the National Science Foundation recently stated that a majority of young people believe astrology to be scientific, as reported by Science News, Mother Jones, UPI, and Slashdot, among others. Troubling if true, but I believe this to be a faulty interpretation of the NSF report. And I have human subjects data to support this argument.

What the NSF actually did was ask the question, “Is astrology scientific?” to a wide variety of Americans. The problem with human subjects data – as any psychologist like myself will tell you – is that simply asking someone a question rarely gives you the information that you think it does. When you ask someone to respond to a question, it must pass through a variety of mental filters, and these filters often cause people’s answers to differ from reality. Some of these processes are conscious and others are not. This is one of the reasons why personality tests are criticized (both fairly and unfairly) as ways to capture human personality – people are notoriously terrible at assessing themselves objectively.

Learning, and by extension knowledge, are no different. People don’t always know what they know. And this NSF report is a fantastic example of this in action. The goal of the NSF researchers was to assess, “Do US citizens believe astrology is scientific?” People were troubled that young people now apparently believe astrology is more scientific than in the past. But this interpretation unwisely assumes that people accurately interpret the word astrology. It assumes that they know what astrology is and recognize that they know it in order to respond authentically. Let me explain why this is an important distinction with an anecdote.

It wasn’t until around my sophomore year of college that I discovered the word “astrology” referred to horoscopes, star-reading, and other pseudo-scientific nonsense. I had heard of horoscopes before, sure, but not the term astrology. I had, as many Americans do, a very poor working vocabulary to describe scientific areas of study. Before that point, in my mind, astrology and astronomy were the same term.

I did not, however, think that horoscopes were scientific. I simply did not know that there was a word for people who “study” horoscopes. If you’d asked me if astrology was scientific before college, I would have said yes – because to me, astrology was the study of the stars and planets, their rotations, their composition, the organization of outer space, and so on. Of course, in reality, it isn’t. Astronomy is a science. Astrology is the art of unlicensed psychological therapy.

When I saw the NSF report, I was reminded of my own poor understanding of these terms. “Surely,” I said to myself, “it’s not that Americans believe astrology is scientific. Instead, they must be confusing astronomy with astrology, like I did those many years ago.” Fortunately, I had a very quick way to answer this question: Amazon Mechanical Turk (MTurk).

MTurk is a fantastic tool available to quickly collect human subjects data. It pulls from a massive group of people looking to complete small tasks for small amounts of money. So for 5 cents per survey, I collected 100 responses to a short survey from American MTurk Workers. It asked only 3 questions:

1. Please define astrology in 25 words or less.

2. Do you believe astrology to be scientific? (using the same scale as the NSF study)

3. What is your highest level of education completed? (using the same scale as the NSF study)

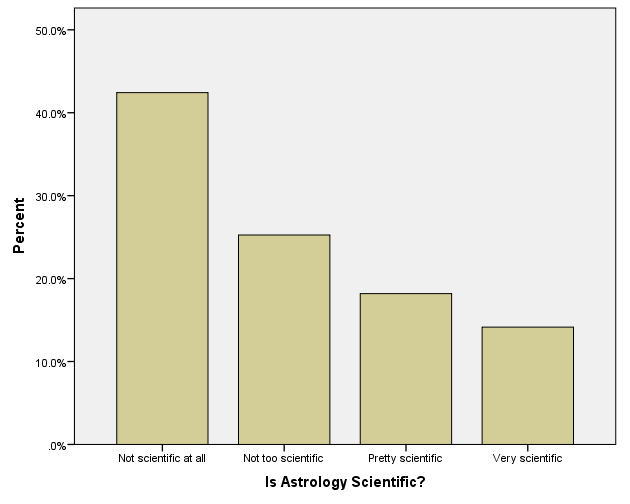

After getting 100 responses (a $5 study!), I first sorted through the data to eliminate 1 bad case from someone who entered gibberish when responding to the first question. Then I tried to replicate the findings from the NSF study by looking at a bar chart of #2 for the remaining 99 people. It was very similar to what NSF reported, as shown below.

Across the sample, approximately 30% found astrology to be “pretty scientific” or “very scientific.” This is lower than the NSF report found (42% for “all Americans”), but this is probably due to the biases introduced by MTurk in comparison to a probability sample of US residents – MTurk users tend to be a little more educated and a bit older. Still a pretty high proportion though.

Next, I went through and coded the text responses to identify who correctly differentiated between astrology and astronomy. 24% of my sample (24 of 99 people) answered this question incorrectly. And given the biases of MTurk, I suspect this percentage is higher among Americans in general. Some sample incorrect responses:

- Astrology is the study of the stars and outer space.

- Astrology is the study of galaxies, stars and their movements.

- the study of how the stars and solar system works.

- Astrology is the scientific study of stars and other celestial bodies.

These are in stark contrast to the correct responses:

- Trying to determine fate or events from the position of the stars and planets

- Astrology is the prediction of the future. It is predicted through astrological signs which are influenced by the sun and moon.

- Astrology is the study of how the positions of the planets affect people born at certain times of the year.

- The study the heavens for finding answers to life questions

For those statistically inclined, a one-sample t-test confirms what I suspected: if people generally did not have trouble distinguishing between astrology and astronomy, we would not have seen such an extreme number of incorrect answers: t(98) = 17.032, p < .001. There is definitely some confusion between these terms.

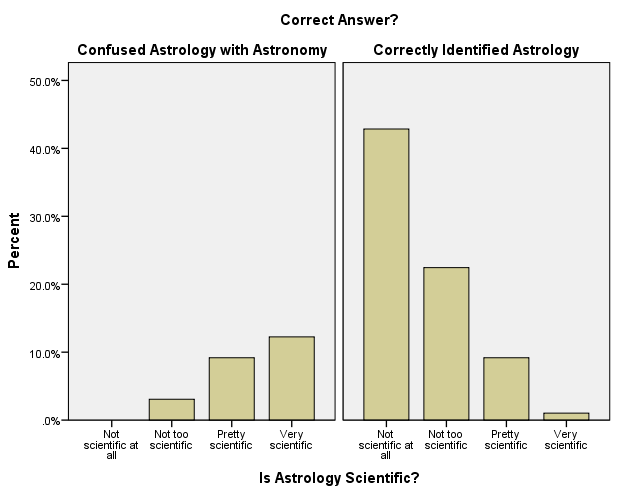

Next, I created the bar graph above again, but split it by whether or not people got the answer correct.

Quite a big difference! Among those who correctly identified astrology as astrology, only 13.5% found it “pretty scientific” or “very scientific”. Only 1 person said it was “very scientific.” Among those who identified astrology as astronomy, the field was overwhelmingly seen as scientific, exactly as I expected. This is the true driver of the NSF report findings. Both an independent-samples t-test and a Mann-Whitney U test (depending on what scale of measurement you think Likert-type scales are) agree that science perceptions differ significantly between those responding about astronomy and those responding about astrology (U = 119.00, p < .001; t(97) = 10.537, p < .001). Massive effect too (d = 2.48)! Thus I conclude that it is invalid to simply ask people about astrology and assume that they know what that term means.
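For readers who want to see how the effect size relates to the t statistic, here is a small sketch using the standard conversion d = t × sqrt(1/n1 + 1/n2) for two independent groups. The group sizes are an assumption inferred from the counts above (24 of 99 people defined astrology as astronomy, leaving 75), and the post does not say which formula produced d, so treat this as a consistency check and not as the original calculation.

```python
import math


def cohens_d_from_t(t, n1, n2):
    """Convert an independent-samples t statistic to Cohen's d (pooled SD)."""
    return t * math.sqrt(1 / n1 + 1 / n2)


# Assumed group sizes, inferred from "24 of 99 people" above.
n_astronomy = 24            # defined astrology as astronomy
n_astrology = 99 - 24       # defined astrology correctly

d = cohens_d_from_t(10.537, n_astronomy, n_astrology)
print(round(d, 2))
```

Under these assumptions the conversion gives roughly 2.47, in line with the reported d = 2.48; a small gap like this could come from rounding or from a slightly different effect size formula.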

Various media reports have noted that the NSF report discussed how 80-99% of Chinese respondents in a 2010 Chinese study reported skepticism of various aspects of astrology, interpreting this difference as evidence supporting the decline of US science education. Instead, I suspect that this difference only reveals that in Chinese, the words for astronomy and astrology are not very similar. Among my respondents who correctly identified astrology, 86.5% did not rate it as scientific, which is right in the middle of the range of findings from the Chinese sample, although I expect a broader US sample than MTurk would probably be a little lower. But at the least, I feel comfortable concluding that we are safe from Chinese scientific dominance for at least another year.

So why might this effect have been worse for young people? My guess is that the long-term effect is exactly the opposite from what the NSF reports. I suspect that young people are in fact more skeptical of astrology than ever before – and I believe this skepticism is driven by reduced exposure in youth. Young people just aren’t as likely to hear the word astrology in connection to horoscopes anymore, and probably less likely to hear about horoscopes at all because virtually no one reads newspapers anymore (which I also suspect is where most people were historically first exposed to them; I remember learning of horoscopes for the first time in the Tennessean many years ago as a child in Nashville). As people age, they’re more likely to hear the term “astrology” and say, “Oh, astrology means horoscopes? That’s obviously fake! Nothing like astronomy!” Perhaps the demise of print journalism isn’t as bad as we thought!

If you’d like to take a look at my data from MTurk, it is available to download here. Overall, I think the lesson from this is quite clear: more NSF funding for social scientists to prevent these problems in the future!!

Update 2/18: Thanks to @paldhaus and @ChrisKrupiarz, I discovered a European Commission report corroborating my findings, available here (see p. 35-36). In the Commission survey of the EU, a “split ballot” approach was used, asking half of respondents how scientific “horoscopes” are, whereas the other half were asked how scientific astrology is. 41% of those in the EU identified astrology as scientific, whereas only 13% identified horoscopes as scientific. Since this was a 2005 study, it is surprising that NSF has not altered their methods since.

It’s well-supported in psychology that fluid intelligence (i.e. a person’s ability to solve unique, unfamiliar problems or remember large amounts of unfamiliar information, or otherwise flex their mental muscles) decreases with age. There are several theories as to why – perhaps our brains become less efficient over time as our neurons age, or perhaps we simply exercise our brains less as we get older, resulting in a sort of muscular atrophy. But a new paper by Ramscar, Hendrix, Shaoul, Milin and Baayen (2014) in Topics in Cognitive Science, described in an article published by the New York Times, provides a new theory: as our brains store more information, it may become more difficult to retrieve memories. This contention is based on information theory, which explores how information processing occurs in computing systems (e.g., what is the most efficient way to seek specific information given a database of general information?). As we get older, the theory states, we have more memories to sort through, which causes us to perform more poorly on cognitive measures.

Several statements in the New York Times article on this topic intrigued me.

- The title: “The Older Mind May Just Be a Fuller Mind.”

- A notable assertion: “The new report will very likely add to a growing skepticism about how steep age-related decline really is. It goes without saying that many people remain disarmingly razor-witted well into their 90s; yet doubts about the average extent of the decline are rooted not in individual differences but in study methodology.”

- The conclusion: “It’s not that you’re slow. It’s that you know so much.”

The title is tentative, the next statement is an anecdote (several people does not a theory prove), and the last is just intended to be memorable. Yet these statements, among others in the article, are provocative, leaving the reader with just enough information in order to maximize the punch of that final pithy conclusion. But is it a valid conclusion?

For that, I needed to turn to the original research article. This was a bit of an exercise, because the article is presumably written with an audience of information scientists in mind, and I am not an information scientist. But from my reading, I believe that I was able to decode what they did. The basic premise was that the researchers conducted a series of simulations (i.e. not using human participants) on developed vocabulary. In the first simulation, the researchers constructed a database of words of varying complexity. They then selected words based upon a simulated 20 people learning words over their lifespans, plotting the number of words learned at each age.

This is the first peculiar decision in this study if the goal is to generalize to humans, because it assumes that people add new words to their vocabulary at a predictable, curved rate. In one illustrative graph (p. 10), the mean vocabulary across the 20 individuals was plotted as a function of age – however, age was defined as the number of words that a person had been exposed to. The valid conclusion from this figure appears to be that exposure to a greater number of words leads to a larger vocabulary. So the actual finding is not surprising, but the use of the word “age” to describe the effect is a bit strange. For this to be valid in describing age, our reading habits would need to be something like: I read random books with random words in them and am exposed to new words on a regular basis. I suspect reality is not quite so random.

The researchers next made some assumptions about how many words people were exposed to and the growth rates of vocabulary. They assumed that adults read 85 words/min, 45 min/day, 100 days/year. They also assumed that adults encountered new words not in their vocabulary at the rates established in their prior simulation. Given those assumptions, they created algorithms to read from lists of words at that rate for the equivalent of a 21-year-old (1,500,000 words) and a 70-year-old (29,000,000 words). They then simulated how long retrieving words from each of those lists would take (relative to each other), finding that the 70-year-old would take longer to browse through her memory than the 21-year-old would.
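To put those figures on a more familiar scale, here is a back-of-the-envelope conversion in Python. It only does arithmetic on the numbers quoted above; it is not a reconstruction of the researchers' simulation, and how their cumulative word totals were actually derived from the reading rate is not something this sketch tries to answer.

```python
# Reading-rate assumptions as quoted above.
WORDS_PER_MINUTE = 85
MINUTES_PER_DAY = 45
DAYS_PER_YEAR = 100

words_per_year = WORDS_PER_MINUTE * MINUTES_PER_DAY * DAYS_PER_YEAR


def years_of_reading(total_words):
    """Years needed to read total_words at the assumed constant rate."""
    return total_words / words_per_year


# Cumulative exposure totals as quoted above.
for label, total in [("21-year-old", 1_500_000), ("70-year-old", 29_000_000)]:
    years = years_of_reading(total)
    print(f"{label}: {total:,} words is about {years:.1f} years "
          f"at {words_per_year:,} words/year")
```

At the stated rate an adult reads 382,500 words per year, so the two totals correspond to roughly 3.9 and 75.8 years of reading respectively.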

These do not seem like wise assumptions to me. I would hazard a guess that I learn a handful of new words in any given year, and my reading is also restricted to things I’m interested in on the Internet (where the overall reading level is not terribly high) and in psychology journals. I strongly doubt people reading newspapers and magazines in their free time (the primary sources of reading for our current seniors) encountered new words at a predictable, consistent rate – and I would guess that the new word encounter rate of a non-academic is somewhat lower than mine. More accessible metrics (e.g. new words/year) are not presented in the article, so I don’t have any way to convert the numbers reported in the article into more understandable metrics. Again, this assumes that people read random sources with random words in them, constantly exposing themselves to new vocabulary, regardless of age. It also assumes that the brain works perfectly like a computer algorithm; yet we know that the human brain relies heavily on heuristics/shortcuts and makes a lot of mistakes. This does not match up with the researchers’ model.

There were also a few writing peculiarities. Take this, from p. 16:

> To ensure older adults’ greater sensitivity to low-frequency words was not specific to this particular data set, a second empirical set of data was analyzed in the same way (Yap, Balota, Sibley, & Ratcliff, 2011; Fig. 4). All of the effects reported in the first analysis replicated successfully.

This is the entire discussion of this apparently independent follow-up study – no explanation of anything they did in that follow-up study, no reporting of any statistics from the “comparisons” actually conducted, no definition of a “successful replication”, and no list of the effects that they tested. The entire “methods” and “results” sections associated with this statement were in fact a single figure. Maybe that’s normal in cognitive science, but it would never fly in a decent psychology journal. We have no way to know what they did, why they did it, what was replicated, what went wrong, etc. The ability to replicate from a write-up is absolutely required for good science, and this element is missing from this part of the paper. That’s not catastrophic – since this replication isn’t really necessary to make the point they were making – but it adds yet another “that’s weird” to my tally as I read through.

So despite being based upon faulty assumptions about human learning as well as being limited entirely to the growth of vocabulary over the life-span, the second simulation described above seems to be the basis for the NYT article conclusion that memory in general does not decline with age. This conclusion is not justified. Contrary to the NYT statement, I am no more skeptical of age-related decline than I was before reading either the NYT piece or the original research article.

To me, both the NYT and original article demonstrate only one thing clearly: when applying information processing theory to understanding human cognition, one must be careful to remember the “human”.

- Ramscar, M., Hendrix, P., Shaoul, C., Milin, P., & Baayen, H. (2014). The myth of cognitive decline: Non-linear dynamics of lifelong learning. Topics in Cognitive Science, 6, 5-42. doi:10.1111/tops.12078