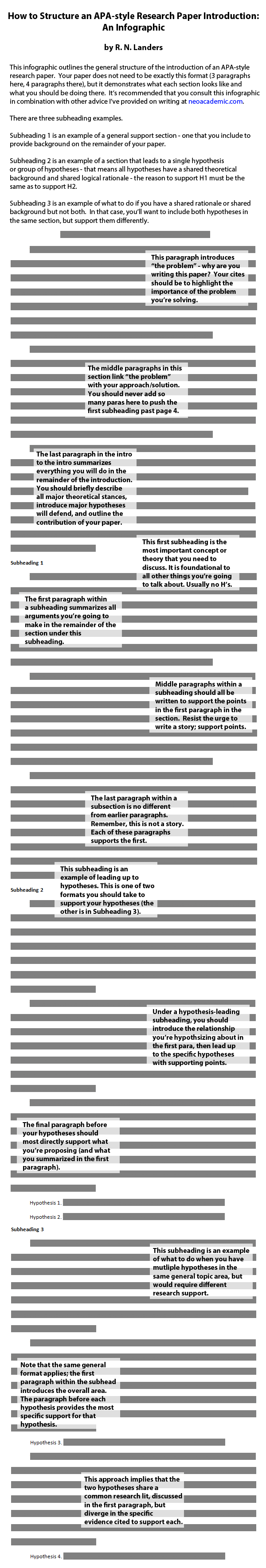

Writing APA-style papers is a tricky business. So to complement my discussion of writing publishable scientific articles, I’ve created an infographic showing some of the major ideas you should consider when writing the introduction to an APA-style research paper. This approach will work well in most social scientific fields, especially Psychology. If you’re writing a short paper, you might only have the first section (the “intro to the intro”) and something like either Subheading 2 or 3. It all depends on the particular research question you’re asking!

The key to writing scientific papers is that you throw out most of what you know about writing stories. Scientific research papers are based upon logical, organized arguments, and scientists expect to see a certain structure of ideas when they read papers. So if you want your paper to be read, you need to meet those expectations.

Many modern organizations try to compete for top talent by adding fancy, interactive experiences to their recruitment process – think of something like a virtual tour. Such interactive experiences are expensive, but their creators hope that they will attract a higher class of recruit. New research from Badger, Kaminsky, and Behrend (2014) in the Journal of Managerial Psychology explores the impact of such media on some recruitment-related outcomes, concluding that highly demanding interactive recruitment activities are likely to harm how well recruits remember important information and do not provide other benefits.

To understand why this might be the case, you must first understand that there are two competing arguments about interactive experiences in recruitment. On one hand, media richness theory suggests that richer communication techniques lead recruits to form more accurate beliefs and to know more about the organizations recruiting them. Often, this is tested by comparing face-to-face recruitment with computer-mediated recruitment; in general, face-to-face is better. If media richness is really the mechanism behind such gains, we would also expect richer media to outperform less rich media – for example, 3D virtual worlds should produce better recruitment outcomes than an ordinary website.

On the other hand, cognitive load theory suggests that humans have a finite amount of cognitive resources from which they must draw. If those resources are overdrawn, for example by too many simultaneous mental demands, performance suffers, so we’d expect recruitment outcomes to be worse in richer, more demanding environments. If cognitive load theory is correct, that same 3D virtual world should produce worse recruitment outcomes than an ordinary website.

The researchers furthermore distinguished between the types of outcomes each theory speaks best to. Media richness theory speaks more to affective reactions – richer media lead to a feeling of greater affiliation and understanding of company culture. Cognitive load theory speaks more to cognitive outcomes – higher loads lead to less information remembered.

To test these ideas, the researchers conducted a quasi-experiment in which 471 MTurk workers experienced either a traditional website or an interactive recruitment experience in the 3D virtual world Second Life.

Using path analysis, the researchers concluded from their quasi-experiment that the richer media environment did indeed reduce how well participants remembered the recruitment message. They also found that this effect was mediated by the experience of increased cognitive load, supporting cognitive load theory. There was no effect on culture-related information.

Overall, this supports cognitive load theory as the more relevant theory for studying the role of technology in recruitment outcomes, at least with regard to Second Life. Although the experience of Second Life was certainly richer, it was also much more demanding, which overrode any potential benefits. What this study does not speak to are innovative interactive experiences that are a little less demanding – like online recruitment games. Such games, or other similar experiences, may strike a better balance between cognitive load and media richness, but that question is left for future research.

- Badger, J. M., Kaminsky, S. E., & Behrend, T. S. (2014). Media richness and information acquisition in internet recruitment. Journal of Managerial Psychology, 29(7), 866-883. doi:10.1108/JMP-05-2012-0155

A number of articles have been appearing in my news feeds lately from representatives of Google talking about hiring interviews. Specifically, Mashable picked up a Q&A exchange posted on Quora in which someone asked: “Is there a link between job interview performance and job performance?”

As any industrial/organizational (I/O) psychologist knows, the answer is, “It depends.” However, an “ex-Googler” and author of The Google Resume provided a different perspective: “No one really knows, but it’s very reasonable to assume that there is a link. However, the link might not be as strong as many employers would like to believe.”

The New York Times also recently posted a provocatively titled piece, “In Head-Hunting, Big Data May Not Be Such a Big Deal,” in which Google’s Senior Vice President of People Operations was interviewed about interviews and noted:

“Years ago, we did a study to determine whether anyone at Google is particularly good at hiring. We looked at tens of thousands of interviews, and everyone who had done the interviews and what they scored the candidate, and how that person ultimately performed in their job. We found zero relationship. It’s a complete random mess, except for one guy who was highly predictive because he only interviewed people for a very specialized area, where he happened to be the world’s leading expert.”

You might ask from this, quite reasonably, “If the evidence is so strong that interviews are poor, why not get rid of interviews?” My question is different: “Why should we let Google drive hiring practices across industries?”

I/O psychology, if you aren’t familiar with it, involves studying and applying psychological science to create smarter workplaces. I/O systematically examines questions like the one asked by this Quora user, in order to provide recommendations to organizations around the world. This science is conducted by a combination of academic and organizational researchers, working in concert to help organizations and their employees. It thus includes far more perspective than internal studies within an international technology corporation can provide, which is important to remember here.

Current research in I/O, conducted by scores of researchers across many organizations and academic departments all working together, in fact contradicts several of the points made by both Google and the ex-Googler. Let me walk you through a few, starting with that longer quote above.

“It’s a complete random mess” makes for a great out-of-context snippet, but the reality is much more complex than that, for two reasons.

First, unstructured interviews – those interviews where random interviewers ask whatever random questions they want to ask – are in fact terrible. If your organization uses these, it should stop right now. But structured interviews, in which every applicant receives the same questions as every other applicant, fare far better. There are many reasons that unstructured interviews are terrible, but they generally come down to the fact that interviewers – and people in general – are highly biased. When we can ask any question we want to evaluate someone, we ask questions that get us the outcome we want. If we like the person, we ask easy, friendly questions. If we don’t like the person, we ask difficult, intimidating questions. That reduces the effectiveness of interviews dramatically. But standardizing the questions helps fix this problem.

Second, we’re talking about Google. By the time Google interviews a job applicant, that applicant has already been through several rounds of evaluations: test screens, resume screens, educational screens, and so on. Google does not interview every candidate who walks through the front door. If you’re running a small business, are you getting a steady stream of Stanford grads with 4.0 GPAs? I doubt you are, and if you aren’t, interviews might still help your selection system. Google’s advice is not good advice to follow.

“Some interviewers are harsher graders than others. A hiring committee can see that your 3.2 was a very good score from the guy who gives averages of a 2.3 score, but a mediocre score from the guy who gives averages of a 3.0 average. The study likely doesn’t take this into account.”

That’s true; however, it doesn’t change anything. Reducing the impact of inter-interviewer differences is part of standardization. In an organization as large as Google, standardizing your interviewers completely is impossible. In your business, where you’re hiring fewer than 100 people per year, you can have the same two interviewers talk to every person who gets an interview. Because you’re not Google, you don’t face this problem.
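If you do end up with several interviewers, one common way to put their scores on a comparable footing is to standardize each score against that interviewer’s own rating history. Here is a minimal sketch of that idea. The rating histories are made up for illustration (chosen only so the averages match the 2.3 and 3.0 in the quote), and `standardize` is a hypothetical helper, not something Google or the ex-Googler describes using.

```python
from statistics import mean, pstdev


def standardize(score, interviewer_history):
    """Return the z-score of `score` relative to one interviewer's past ratings."""
    mu = mean(interviewer_history)
    sigma = pstdev(interviewer_history)
    if sigma == 0:
        raise ValueError("interviewer gave identical ratings; cannot standardize")
    return (score - mu) / sigma


# Hypothetical rating histories, invented for this example.
harsh_grader = [1.8, 2.0, 2.3, 2.6, 2.8]    # averages 2.3
lenient_grader = [2.5, 2.7, 3.0, 3.3, 3.5]  # averages 3.0

# The same raw 3.2 means different things coming from each interviewer.
z_harsh = standardize(3.2, harsh_grader)
z_lenient = standardize(3.2, lenient_grader)

print(f"3.2 from the harsh grader:   z = {z_harsh:.2f}")
print(f"3.2 from the lenient grader: z = {z_lenient:.2f}")
```

The standardized score from the harsh grader comes out well above the one from the lenient grader, which is exactly the adjustment a hiring committee would otherwise be making by eye.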

“The interview score is merely a quantitative measure of your performance, but it’s not the whole picture of your performance. It’s possible that your qualitative interview performance (you know, all those words your interviewer says about you) is quite correlated with job performance, but the quantitative measure is less correlated. The people actually deciding to hire you or not are judging you based on the qualitative measure.”

For the reasons I outline above, people are terrible at making judgments. But translating those judgments into numbers actually helps people be more fair. When judging “qualitative performance”, an interviewer is more likely to convince him/herself of things that just aren’t true. “This candidate didn’t answer the questions very well, but I just have a good feeling about him.” “This candidate had all the right answers, but there was something I just didn’t like.” Numbers are your friends. Don’t be afraid.

“People who are hired basically all have average scores in a very small range. I would suspect 90% of hired candidates have between a 3.1 to 3.3 average. Thus, when you say there’s no correlation between interview performance and job performance, we’re really saying that a 3.1 candidate doesn’t do much worse in their job than a 3.3 candidate. That’s a tiny range.”

There is one of two problems here. One, this might be a problem with the system used to determine interview scores, not with interviews in general. There are a variety of techniques to improve judgment related to numeric scales. One approach is anchoring – giving specific behavioral examples related to each point on the scale. For example, the interview designer might assign the behavior “Made poor eye contact” to 3 so that everyone has the same general idea of what a “3” looks like. If your scale is bad, the scores from that scale won’t be predictive of performance.

Two, this may be a symptom of the same “Google problem” I described before. People that make it to the interview stage at Google are already highly qualified. Maybe Google really doesn’t need interviews. But that doesn’t mean interviews are useless for everyone.
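The “Google problem” here is what statisticians call range restriction: when you only look at people who scored in a narrow band, a real relationship can look much weaker than it is. The simulation below is a rough sketch of that effect. Every number in it is an assumption chosen for illustration (a true correlation of 0.5 in the full applicant pool, and hiring only the top 10% of interview scores); none of it comes from Google’s data.

```python
import random
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(vx * vy)


rng = random.Random(0)  # fixed seed so the sketch is reproducible
TRUE_R = 0.5            # assumed correlation in the full applicant pool
N_APPLICANTS = 20000

# Simulated standardized interview scores and job performance.
interview = [rng.gauss(0, 1) for _ in range(N_APPLICANTS)]
performance = [TRUE_R * x + sqrt(1 - TRUE_R ** 2) * rng.gauss(0, 1)
               for x in interview]

r_all = pearson(interview, performance)

# "Hire" only the top 10% of interview scores, then correlate within that group.
cutoff = sorted(interview)[int(0.9 * N_APPLICANTS)]
hired = [(x, y) for x, y in zip(interview, performance) if x >= cutoff]
r_hired = pearson([x for x, _ in hired], [y for _, y in hired])

print(f"correlation among all applicants: {r_all:.2f}")
print(f"correlation among hires only:     {r_hired:.2f}")
```

The correlation among hires comes out noticeably smaller than the correlation in the full pool, even though the underlying relationship never changed. That is why a weak correlation among people who were already hired says little about whether interviews work.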

“Ultimately, it’s almost impossible to truly understand if there’s a correlation between interview performance and job performance. No company is willing to hire at random to test this.”

I’ll try to avoid getting too technical here, but 1) hiring at random is not necessary to draw such a conclusion and 2) this is a red herring. In no single organization do we need to answer, “do interviews predict performance in general, regardless of context?” Instead, we only need to know whether interviews add predictive value beyond the other parts of the hiring system currently in use. For example, suppose that right now you use resume screens, interviews, and some personality tests. With the right data collected, you can easily determine how well these parts work together (or don’t). That’s what organizations need to know, and Google’s hiring practices don’t help you answer that question for your organization.
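To make “add more to the hiring process” concrete, one standard way to frame it is incremental validity: how much more variance in job performance you explain once interview scores are added to what you already collect. The sketch below is a simplified illustration with only two predictors (a resume screen score and an interview score) and simulated data. The effect sizes, the sample size, and the helper `incremental_r2` are all assumptions for the example; a real analysis would use your own organization’s data and likely more predictors.

```python
import random
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(vx * vy)


def incremental_r2(baseline, added, outcome):
    """Return (R^2 with baseline only, R^2 with both, gain from `added`).

    Uses the two-predictor formula for R^2 in terms of correlations.
    """
    r_yb = pearson(outcome, baseline)
    r_ya = pearson(outcome, added)
    r_ba = pearson(baseline, added)
    r2_baseline = r_yb ** 2
    r2_both = (r_yb ** 2 + r_ya ** 2 - 2 * r_yb * r_ya * r_ba) / (1 - r_ba ** 2)
    return r2_baseline, r2_both, r2_both - r2_baseline


# Simulated data: all coefficients below are invented for illustration.
rng = random.Random(1)
n = 5000
resume = [rng.gauss(0, 1) for _ in range(n)]
# Interview scores overlap with resume scores but carry some unique information.
interview = [0.5 * r + sqrt(0.75) * rng.gauss(0, 1) for r in resume]
performance = [0.3 * r + 0.3 * i + rng.gauss(0, 1)
               for r, i in zip(resume, interview)]

r2_resume, r2_both, gain = incremental_r2(resume, interview, performance)
print(f"R^2, resume screen only:      {r2_resume:.3f}")
print(f"R^2, resume + interview:      {r2_both:.3f}")
print(f"gain from adding interviews:  {gain:.3f}")
```

If the gain is close to zero in your own data, interviews are not telling you anything your other screens don’t already capture; if it is meaningfully positive, they are earning their place in your system.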

Your organization is not Google. The problems you face, and the appropriate solutions to those problems, are not the same as Google’s. Although Google’s stories are interesting, interesting stories are not something on which to build your employee selection system. You need science for a smarter workplace.

The key to all of this is that you need to make the decisions that are right for your organization. That involves conducting research within your own organization to answer the questions you find important and meaningful. If you are in charge of or have influence over human resources at your organization, you have the power to answer such questions yourself. You can develop, deploy and test a new hiring system yourself, and that’s the only way to “really know.” Don’t take hiring advice from Google, because Google’s success or lack of success using interviews, or anything else, often has little to do with how successful it will be for you.