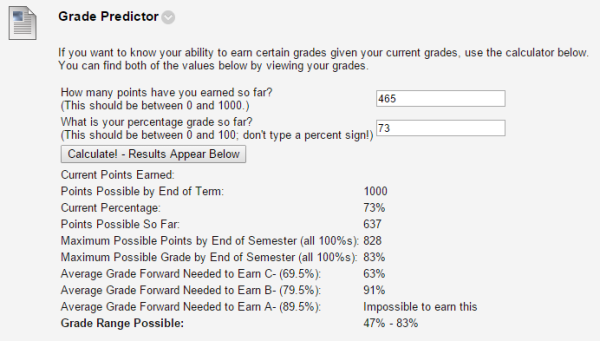

If you teach college classes like I do, around this time of year you begin to get an astonishing number of emails of the “Can I pass?” and “What do I need to get some arbitrary grade I’ve decided is a worthwhile goal?” variety. Although I’m happy to help these students feel better about their potential to succeed over the rest of the semester (or help them understand that they have waited way too long to start worrying about it now), it takes several minutes per student to run the calculations.

This semester, I realized that simply writing the code so that students could run these calculations themselves in Blackboard would take only about as much time as answering four or five such emails. So that’s what I did.

For this tool to be valuable, you will need a grading system based upon points. If you have designed your course so that everything is graded on a 100% scale and weighted by importance, this code (as written) won’t help.

In addition to Blackboard, you should be able to use this code in any LMS that supports instructor-written JavaScript. It definitely works as a standalone webpage, and it probably works in Canvas and others, but I don’t have access to anything other than Blackboard, so I have no way to test it. If you do get it working in another LMS, let me know!

Update: Code confirmed to work in both D2L and Moodle!

To use this code yourself:

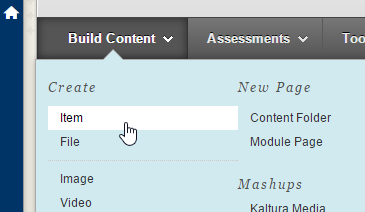

- Create a new Item in Blackboard wherever you want the Predictor to appear. Name it something meaningful (like “Grade Predictor”).

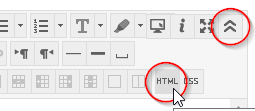

- Click on the HTML button in the item editor to pull up the raw code behind the item post. If you don’t see the HTML button, you might need to click the chevron button at the top right to display the advanced item editing tools (both circled in red below).

- A window will pop up. Copy the code found by clicking this link and paste it into that window.

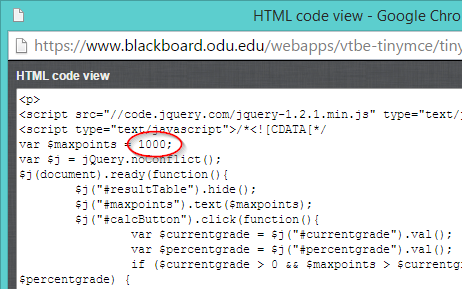

- Before you close that window, replace the number assigned to “maxpoints” with the total number of points available in your course. In the example, there are 1000 total points in the course. Change this number to whatever you need, but importantly, DO NOT CHANGE ANYTHING ELSE. Be very careful not to remove the semicolon; bad things will happen if you do.

- Click Update and then Submit to save your new Item. If all went well, you will see the predictor as a new entry in Blackboard. Try it out!

- To ensure that students have enough information about their grades to use the tool, I recommend you have a Current Point Total column and a Current Percentage Grade column in Blackboard. This is easiest to manage if you use two Calculated Columns to maintain current grade estimates. Alternatively, you could force students to calculate their point totals and percentages on their own, but that’s just mean!
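For readers curious about what the predictor is actually calculating, here is a minimal, hypothetical sketch of the arithmetic behind a points-based “what do I need?” question. It is not the code from the link above; only `maxpoints` mirrors the value you edit in the steps above, and every other name (`percentNeededOnRemaining`, `earned`, `graded`, `targetPercent`) is an illustrative assumption.

```javascript
// Hypothetical sketch of the arithmetic behind a points-based grade predictor.
// `maxpoints` mirrors the value instructors edit; all other names are
// illustrative assumptions, not taken from the actual predictor code.
const maxpoints = 1000;

/**
 * Percentage a student must average on the not-yet-graded points to finish
 * the course with `targetPercent` of `total` points.
 *   earned - points the student has earned so far
 *   graded - points possible on everything graded so far
 * A result above 100 means the target is out of reach.
 */
function percentNeededOnRemaining(earned, graded, targetPercent, total = maxpoints) {
  const remaining = total - graded;                   // points still available
  const targetPoints = (targetPercent / 100) * total; // points needed by the end
  const stillNeeded = targetPoints - earned;
  if (remaining <= 0) {
    // Nothing left to grade: the target is either already met or unreachable.
    return stillNeeded <= 0 ? 0 : Infinity;
  }
  return Math.max(0, (stillNeeded / remaining) * 100);
}

// Example: 520 of 650 graded points earned, aiming for 80% of 1000 points.
console.log(percentNeededOnRemaining(520, 650, 80)); // 80
```

This is also why the Current Point Total column matters: the calculation needs both the points earned and the points graded so far, not just a percentage.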

The Society for Industrial and Organizational Psychology is the primary organizational affiliation for industrial/organizational psychologists, and its annual conference has a substantial impact each year on the thinking and networking of the field. So have you ever wondered who the most prolific presenters are each year?

Well, I did. And not just because I thought I’d be on it! That’s very cynical of you! (Wait, was that out loud?)

This year, 2724 different people appear on the SIOP program. It’s huge! Who are the biggest influencers? The Top 20 appear below (because who can resist a listicle?), although there are really 21 of them, because of an 8-way tie for 14th! If you don’t appear on the list, never fear – you can find your precise ranking and value as a human being by taking a look at the original data here.

And if it’s not blatantly obvious by now, I think judging yourself on rankings is a little silly. This is just for fun!

| Rank | Count | Name | Affiliation | More Info |
|---|---|---|---|---|
| 1st | 14 | Salas, Eduardo | University of Central Florida | His expertise includes helping organizations on how to foster teamwork, design and implement team training strategies, facilitate training effectiveness, manage decision making under stress, develop performance measurement tools, and design learning environments. |
| 2nd | 13 | Ryan, Ann Marie | Michigan State University | Her major research interests involve improving the quality and fairness of employee selection methods, and topics related to diversity and justice in the workplace. |
| 3rd | 11 | Boyce, Anthony S | Aon Hewitt, Inc. | In my research and development capacity, I have led the creation of more accurate, efficient, engaging, and globally relevant selection and assessment processes by using computerized adaptive testing methods, web-based job simulations, and media-rich item types. |
| 4th | 10 | Joseph, Dana | University of Central Florida | Her research interests include emotions in the workplace, employee engagement, workplace deviance, and time and research methods. |
| 4th | 10 | Wang, Mo | University of Florida | He specializes in research areas of retirement and older worker employment, expatriate and newcomer adjustment, occupational health psychology, leadership and team processes, and advanced quantitative methodologies. |
| 6th | 9 | Carter, Nathan T | University of Georgia | My main area of research involves understanding the use of psychological measures in organizational settings. |
| 6th | 9 | Dalal, Dev K | University of Connecticut | His research interests include judgment and decision making, applications of measurement, item response theory, structural equation modeling, and research methods and design. |
| 6th | 9 | Hebl, Michelle (Mikki) | Rice University | My research focuses on issues related to diversity and discrimination. I am particularly interested in examining subtle ways in which discrimination is displayed, and how such displays might be remediated by individuals and/or organizations. |
| 9th | 8 | Allen, Tammy D | University of South Florida | Her research centers on employee career development and employee well-being at both work and home. Specific interests include work-family issues, career development, mentoring relationships, organizational citizenship, mindfulness, and occupational health. (Ed. I also hear she’s tam-tastic!) |
| 9th | 8 | Burke, Shawn | University of Central Florida | C. Shawn Burke’s expertise includes teams and their leadership, team adaptability, team training, measurement, evaluation, and team effectiveness. |
| 9th | 8 | Cucina, Jeffrey M | US Customs and Border Protection | The man, the myth. (Ed. He didn’t really write that. He’s missing from the Internet! Like a ghost!) |
| 9th | 8 | Kozlowski, Steve W | Michigan State University | My primary research interests focus on the processes by which individuals, teams, and organizations learn, develop, and adapt. |
| 9th | 8 | Oswald, Fred | Rice University | His research and grants deal with personnel selection and testing in corporate, military and educational environments; more specifically, his wide array of publications and grants deal with the issues and findings related to developing, implementing, analyzing, interpreting and meta-analyzing various measures of individual differences (e.g., personality, ability, biodata, situational judgment). |
| 14th | 7 | Behrend, Tara S | The George Washington University | Her research interests center around understanding and resolving barriers to computer-mediated work effectiveness, especially in the areas of training, recruitment, and selection. |
| 14th | 7 | Dahling, Jason | The College of New Jersey | His research and teaching interests focus on applications of self-regulation research to understanding feedback processes, employee deviance, career development, and emotion management in the workplace. |
| 14th | 7 | Fink, Alexis A | Intel Corporation | She is currently leading the Talent Intelligence and Analytics team at Intel. Her team delivers insight that drives business results, including external talent marketplace analytics, and research across leadership, management and employee audiences. |
| 14th | 7 | Hoffman, Brian J | University of Georgia | My primary research interest revolves around the person-perception domain and its application to the assessment of human performance. |
| 14th | 7 | King, Eden B | George Mason University | Dr. King is pursuing a program of research that seeks to guide the equitable and effective management of diverse organizations. Her research integrates organizational and social psychological theories in conceptualizing social stigma and the work-life interface. |
| 14th | 7 | Kuncel, Nathan R | University of Minnesota, Twin Cities | His specialities include the structure and prediction of academic and work performance and the predictive validity of standardized tests and non-cognitive predictors. |
| 14th | 7 | Landers, Richard N | Old Dominion University | His research program focuses upon improving the use of Internet technologies in talent management, especially the measurement of knowledge, skills and abilities, the selection of employees using innovative technologies, and learning conducted via the Internet. |
| 14th | 7 | Martinez, Larry R | Pennsylvania State University | He specializes in stigmatization, prejudice, and discrimination across the spectrum of employment experiences (e.g., hiring, interviewing, conflict, turnover, climate, attitudes), particularly from the target’s perspective and the role of nonstigmatized allies in reducing discrimination. |
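If you’re wondering how a “Top 20” ends up with 21 people, the ranks above follow what is usually called standard competition (“1224”) ranking: tied people share a rank, and the next rank skips ahead by the size of the tie. The sketch below reproduces that rule using the counts from the table; the function names are my own illustrative choices, and the rule itself is inferred from the ranks shown rather than taken from how the original data were processed.

```javascript
// Standard competition ("1224") ranking: tied entries share a rank, and the
// next rank skips ahead by the number of people in the tie.
function competitionRanks(counts) {
  const sorted = [...counts].sort((a, b) => b - a);
  // Each rank is one more than the number of entries with a strictly higher count.
  return sorted.map((c) => sorted.filter((other) => other > c).length + 1);
}

// Keep everyone tied with whoever sits in position n, so a tie at the
// cutoff can push the list past n entries.
function topNWithTies(counts, n) {
  const sorted = [...counts].sort((a, b) => b - a);
  const cutoff = sorted[Math.min(n, sorted.length) - 1];
  return sorted.filter((c) => c >= cutoff).length;
}

// Presentation counts from the table above.
const counts = [14, 13, 11, 10, 10, 9, 9, 9, 8, 8, 8, 8, 8, 7, 7, 7, 7, 7, 7, 7, 7];
console.log(competitionRanks(counts).join(" "));
// 1 2 3 4 4 6 6 6 9 9 9 9 9 14 14 14 14 14 14 14 14
console.log(topNWithTies(counts, 20)); // 21
```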


As I described in my last post, gamification is often misused and abused, applied in ways and in situations where it is unlikely to do much good. When we deploy new learning technologies, the ultimate goal of that change should always be clear, first and foremost. So how do you actually go about setting that sort of goal? In an upcoming issue of Simulation & Gaming, Landers and Landers (2015) experimentally evaluate the Theory of Gamified Learning, not only to explore how leaderboards can be used to improve learning but also to demonstrate the decision-making process you should engage in before trying them out. This is important, because leaderboards are one of the more contested tools in the gamification toolkit.

On one hand, they are one of the oldest and still most common forms of gamification across contexts, including the learning context. If you’re over about 25, you probably remember a high school teacher or college professor posting grades outside the classroom with your name and your grade. That’s a leaderboard. We’ve been using them to motivate students for a very long time, although the way we use them has been changing recently.

On the other hand, gamification practitioners often lump leaderboards in with points and badges under the term PBL: points, badges, and leaderboards. PBL is often used disparagingly to refer to the most overused of all tools in the gamification toolkit. They are overused because they are the easiest to implement; PBL can be applied to just about any situation, even if there’s not really a good reason to do so.

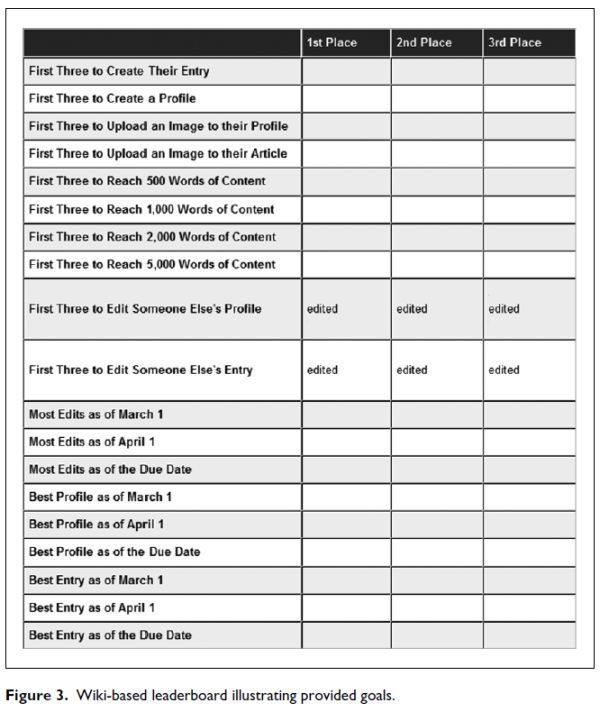

In their paper, the researchers followed the story of a course with a problem that gamification was used to solve. In this course, a semester-long project was assigned on a wiki. The goal of this project was for students to conduct independent research on an assigned topic and write a wiki article about that topic. The instructor hoped that students would engage with the wiki project throughout the semester, learning along the way. Unfortunately, as any course instructor knows, students tend to procrastinate. In looking at usage data from past semesters, the instructor realized that most of the class would only work on the project the week before it was due.

Given this, the instructor turned to the theory of gamified learning to identify what type of gamification would be best to increase the amount of time students spent working on their project. Leaderboards were chosen because they were persistent throughout the entire semester and could be used to provide very clear, well-defined goals related to the amount of time spent working on the project.

If you’ve taken a look at the theory of gamified learning, you’ll recognize this as a gamification effort that can increase a learner behavior or change a learner attitude that we already know is important to learning. We already know that spending more time on an assignment is good for learning! That means the next step is to find a gamification technique that will increase that amount of time.

To test whether this actually happened, the researchers randomly split the course in half and assigned the halves to either a wiki gamified with a leaderboard or a wiki without a leaderboard. This experimental design was necessary to conclude that the leaderboard actually caused changes in learning. The results of this study revealed that this approach worked just as expected. In statistical terms, the amount of time spent on the project mediated the relationship between gamification and project scores. On average, students experiencing leaderboards made 29.61 more edits to their project than those without leaderboards.
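To make “mediated” a bit more concrete, here is a minimal sketch of the textbook product-of-coefficients approach, assuming a binary condition `x` (1 = leaderboard, 0 = no leaderboard), a mediator `m` (such as edit counts as a proxy for time on task), and an outcome `y` (project score). This is not necessarily the analysis used in the paper, it omits significance testing (for example, bootstrapped confidence intervals for the indirect effect), and the function names and data shape are illustrative assumptions.

```javascript
// Product-of-coefficients mediation sketch (x -> m -> y) using ordinary
// least squares written out with centered sums of cross-products.
function mean(v) {
  return v.reduce((s, value) => s + value, 0) / v.length;
}

// Centered sum of cross-products between two equal-length arrays.
function crossSum(u, v) {
  const mu = mean(u);
  const mv = mean(v);
  return u.reduce((s, ui, i) => s + (ui - mu) * (v[i] - mv), 0);
}

function mediation(x, m, y) {
  const sxx = crossSum(x, x);
  const smm = crossSum(m, m);
  const sxm = crossSum(x, m);
  const sxy = crossSum(x, y);
  const smy = crossSum(m, y);
  const a = sxm / sxx;                            // path a: m regressed on x
  const denom = sxx * smm - sxm * sxm;            // zero if x and m are collinear
  const b = (sxx * smy - sxm * sxy) / denom;      // path b: y on m, controlling for x
  const direct = (smm * sxy - sxm * smy) / denom; // c': y on x, controlling for m
  const total = sxy / sxx;                        // c: y regressed on x alone
  return { a, b, indirect: a * b, direct, total };
}
```

With OLS, the total effect splits exactly into the indirect effect (`a * b`) plus the direct effect, so “the leaderboard worked through time on task” corresponds to a sizeable indirect effect alongside a small direct one.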

In actually implementing the leaderboard, two sets of decisions were key. First, all entries on the leaderboard were specifically targeted at increasing the amount of time spent on the project, which was the focal behavior chosen before the project began. For example, several leaderboard items tracked who had edited their entry the most times at multiple points in the course – after one month, after two months, and by the end of the course.
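A checkpoint leaderboard like this is straightforward to compute from an edit log. The sketch below is hypothetical: the record shape `{ student, date }`, the function name `leaderboardAsOf`, and the sample dates are assumptions for illustration, not details of the wiki used in the study.

```javascript
// Hypothetical sketch: rank students by wiki edits made up to a checkpoint date.
// The record shape { student, date } is an assumption; dates are ISO
// "YYYY-MM-DD" strings, which compare correctly as plain strings.
function leaderboardAsOf(edits, cutoffDate) {
  const totals = new Map();
  for (const { student, date } of edits) {
    if (date <= cutoffDate) {
      totals.set(student, (totals.get(student) ?? 0) + 1);
    }
  }
  // Most edits first; ties broken alphabetically so the order is predictable.
  return [...totals.entries()]
    .map(([student, count]) => ({ student, count }))
    .sort((a, b) => b.count - a.count || a.student.localeCompare(b.student));
}

// Illustrative edit log and checkpoints (not data from the study).
const edits = [
  { student: "Ana", date: "2014-09-10" },
  { student: "Ben", date: "2014-09-20" },
  { student: "Ana", date: "2014-10-05" },
  { student: "Ben", date: "2014-10-06" },
  { student: "Ben", date: "2014-10-07" },
];
for (const checkpoint of ["2014-09-30", "2014-10-31"]) {
  console.log(checkpoint, leaderboardAsOf(edits, checkpoint));
}
```

Recomputing the same ranking at several cutoffs is what keeps the goal alive all semester: a student who fell behind after month one can still top the month-two board.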

Second, the leaderboard was optional. Key to all gamification efforts is that participants must feel that they have a choice to participate. Just as organizational citizenship behaviors stop being citizenship once they are required, a game stops being a game once participation is required. The same applies to gamification. None of the tasks on the leaderboard were explicitly required to earn a high grade; instead, they simply encouraged students to focus their attention and return frequently to their project, as shown below.

It’s important to be very clear that this project does not demonstrate that leaderboards always benefit learning. Instead, leaderboards are just one example of the many tools available to gamification designers that can be used depending upon the specific goal of gamification. This time it was leaderboards; next time, it may be action language or game fiction. The key takeaway is that you need to decide exactly what you’re trying to change before you dive into choosing your game elements, and then choose an element to meet your instructional goals.

- Landers, R., & Landers, A. (2015). An empirical test of the Theory of Gamified Learning: The effect of leaderboards on time-on-task and academic performance. *Simulation & Gaming*. DOI: 10.1177/1046878114563662