The logo for the Society for Industrial and Organizational Psychology. The swoosh indicates change, probably?

So, what’s the difference between industrial and organizational psychology?

The difference these days is quite fuzzy, but it used to be much clearer. Let me tell you a little story.

In the old and ancient times for the field of psychology – which of course means the end of the 19th and first half of the 20th century – there was only one field: industrial psychology. It did not always formally have this name (e.g., people calling themselves “industrial psychologists” were often found in “counseling psychology” or “applied psychology” organizations), but it was what we now think of as historical “industrial psychology.” Industrial psychology was for the most part (although not entirely) focused on improving production in manufacturing and other manual labor sorts of jobs, as well as improving soldier performance on the battlefield (which at that time was also often manual labor).

In manufacturing, managers noticed that employees seemed to work harder sometimes and less hard other times, and they were not sure why. A bunch of researchers with names you’ll recognize if you have studied I/O – Hugo Munsterberg, Walter Dill Scott, James Cattell, and Edward Titchener in particular – promoted the idea that the fledgling field of psychology might be able to shed some light on this. They would of course believe this as they were all students of Wilhelm Wundt, the grandfather of modern psychology.

The growth of industrial psychology was also heavily influenced by and contributed to a movement in the early 1900s called Taylorism, reflecting the viewpoint of Frederick Taylor, a mechanical engineer by training who was inspired by Munsterberg and others. His view was that the American worker was slow, stupid, and unwilling to do any work except by force or threat. However, he also viewed science as the only way to fix the problem he perceived. The popularity of Taylorism (sometimes called “scientific management”) in the US and around the world (Stalin reportedly loved the idea) paved the way for our field to grow, for better or worse.

As a result, industrial psychology at that time had a lot of overlap with what we now call “human factors psychology.” Studies were often conducted like the famous ones by Elton Mayo at the Hawthorne plant of Western Electric, where key elements of the environment – such as lighting – were varied systematically and the effects on worker behavior observed using the scientific method. In fact, if you poke into the history of specific I/O graduate programs, you’ll often find a split between I/O and HF somewhere in their past. The goal of many studies of that era could be described as trying to trick the worker into working harder. The interesting thing about such techniques: they do work… at least to a certain degree.

In addition to trying to change performance while people were at work, other industrial psychologists became interested in hiring. Specifically, many believed that if they could design the “perfect test,” they could find the absolute most productive workers for these businesses. These tests were typically intended to be assessments of intelligence, early versions of what we now conceptualize as latent “general cognitive ability.” Among the earliest and best-known examples of these efforts were the Army Alpha and Army Beta, tests the US Army gave to more than a million soldiers in World War I to assess their readiness to become soldiers, to place them into specific military positions, and also – the first hint of a later shift – to identify high-potential leaders. These tests were early predecessors of a test that is still maintained and studied by I/O psychologists today: the ASVAB.

As industrial psychology grew, so did the feeling that our field was missing something. The Hawthorne studies I referenced earlier are often credited as being the trigger point for this, but Hawthorne better serves as an example of this shift rather than the cause. As early as the 1930s, people became aware that industrial psychology’s focus on predicting and improving performance often ignored other aspects of the worker, specifically those involving people’s feelings. Motivation, satisfaction, how people get along with others – these topics were not of much concern among industrial psychologists, and a number of studies, including those at Hawthorne, increased interest in the application of psychology to the broader workplace. Researchers also wondered if performance could be increased further by looking beyond hiring and worker manipulation – perhaps there was more we could do?

Thus, in 1937, the first organization devoted to I/O was created: Section D (Industrial and Business) of the American Association for Applied Psychology. The AAAP merged into the American Psychological Association in 1945, rebirthing our field as APA Division 14: Industrial and Business Psychology. The shift from “Business” to “Organizational” reflected changing priorities over several decades. Dissatisfaction with the explicit ties to business (and not, for example, the military or government) resulted in the division being renamed simply “Industrial Psychology” in 1962. With the shift away from an industrial economy in the 1960s, dissatisfaction with the term “Industrial” led, in 1973, to the name we have today: Industrial and Organizational Psychology.

So the short version of this answer is that the distinction between industrial and organizational psychology these days is not a particularly strong one. It is instead based on historical shifts in priorities among the founding and early members of the professional organizations in our field. If I had to split them, I’d say people on the industrial side tend to focus more on things like employee selection, training and development, performance assessment and appraisal, and legal issues associated with all of those. People on the organizational side tend to focus more on things like motivation, teamwork, and leadership. But even with that distinction, people on both sides tend to borrow liberally from the other.

There was also a historical association of industrial psychology with more rigorous experimentation and statistics, largely because the focus on hiring could only be improved with those methods. Org psych topics were much broader, and much of that territory stayed unexplored for a lot longer. But that has changed too – there aren’t many org psych papers published anymore without multilevel or structural equation modeling, as contributions on both the I and O sides have become smaller and more incremental than in the past. The old days of I/O were practically a Wild West! You could essentially just go into an organization, change something systematically, write it up, and you’d have added to knowledge. These days, it’s a lot harder.

Behind the scenes of all these theoretical and stance changes was also a huge ongoing battle with the American Psychological Association over where our field should fit as a professional organization (did you ever think it strange that SIOP incorporated as a non-profit while still a part of APA?), a problem that continues to this day. But that’s a different story!

Sources:

A Brief History of the Society for Industrial and Organizational Psychology, Inc.

Update of Landy’s I-O Trees

A Brief History of Industrial Psychology

Copley, F. B. Frederick W. Taylor, Father of Scientific Management. Internet Archive.

History of Industrial and Organizational Psychology. Oxford Handbooks.

SIOP Timeline

“The Difference Between Industrial and Organizational Psychology” originally appeared in an answer I wrote on Quora.

Just a few days ago, the new and very promising open-access I/O psychology journal Personnel Assessment and Decisions released its second issue. And it’s full of interesting work, just as I thought it would be. This issue, in fact, is so full of interesting papers that I’ve decided to review/report on a few of them. The first of these, a paper by Nolan, Carter, and Dalal (2016), seeks to understand a very common problem in practice: despite overwhelmingly positive and convincing research supporting various I/O psychology practices, hiring managers often resist actually implementing them. A commonly discussed concern from these managers is that they will be recognized less for their work, or perhaps even be replaced, as a result of new technologies being introduced into the hiring process. But evidence for these concerns has, to date, been largely anecdotal. Does this really happen? And do managers really resist I/O practices the way many I/Os intuitively believe they do? The short answer suggested by this paper: yes and yes!

In a pair of studies, Nolan and colleagues explore each of these questions specifically in the context of structured interviews. In this case, “structure” is the technology that threatens these hiring managers. But do managers who use structured interviews really get less credit? And are practitioner fears about this really associated with reduced intentions to adopt new practices, despite their evident value?

In the first study, 468 MTurk workers across 35 occupations were sampled. Each was randomly assigned to one cell of a 2 x 2 x 3 design crossing interview structure (yes/high or no), decision structure (mechanical or intuitive combination), and outcome (successful, unsuccessful, or unknown). Each participant then read a standard introduction:

Imagine yourself in the following situation…The human resource (HR) manager at your company just hired a new employee to fill an open position. Please read the description of how this decision was made and answer the questions that follow.

After that prompt, the descriptions varied by condition (one of 12 descriptions followed), but were consistent by variable. Specifically, “high interview structure” always indicated the same block of text, regardless of the other conditions. This was done to carefully standardize the experience. Afterward, participants were asked a) whether they believed the hiring manager had control over and was the cause of the hiring decision and b) whether the decision was consistent (a sample item for this scale: “Using this approach, the same candidate would always be hired regardless of the person who was making the hiring decision.”). I/Os familiar with applicant reactions theory may recognize this as a perceived procedural justice rule.
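
To make the design concrete, here is a minimal Python sketch of how a fully crossed 2 x 2 x 3 design yields 12 conditions, with each vignette assembled from one fixed text block per factor level, so that “high interview structure” always contributes the same wording. This is not the authors’ materials or procedure: the bracketed block text, the function names, and the balanced-shuffle assignment are all hypothetical, and the paper’s actual cell sizes may differ.

```python
import itertools
import random
from collections import Counter

# Hypothetical placeholder text: one fixed block per factor level, so a given
# level always contributes identical wording regardless of the other factors.
BLOCKS = {
    "interview": {"high": "[structured interview text]", "none": "[unstructured interview text]"},
    "decision": {"mechanical": "[mechanical combination text]", "intuitive": "[intuitive combination text]"},
    "outcome": {"successful": "[success text]", "unsuccessful": "[failure text]", "unknown": "[no outcome text]"},
}

# Fully crossing every level of every factor: 2 x 2 x 3 = 12 conditions.
CONDITIONS = list(itertools.product(*(levels.keys() for levels in BLOCKS.values())))


def vignette(condition):
    """Concatenate the fixed text block for each factor level in a condition."""
    return " ".join(BLOCKS[factor][level] for factor, level in zip(BLOCKS, condition))


def assign_balanced(participant_ids, seed=0):
    """Randomly assign participants so that cell sizes differ by at most one."""
    ids = list(participant_ids)
    # Repeat the condition list to fill one slot per participant, then shuffle.
    slots = [CONDITIONS[i % len(CONDITIONS)] for i in range(len(ids))]
    random.Random(seed).shuffle(slots)
    return dict(zip(ids, slots))


assignments = assign_balanced(range(468))
cell_sizes = Counter(assignments.values())
print(len(CONDITIONS), min(cell_sizes.values()), max(cell_sizes.values()))
print(vignette(assignments[0]))
```

Shuffling a pre-filled list of slots keeps assignment random while keeping cells equal in size; drawing a condition independently for each participant with `random.choice` would also be random assignment, but cell sizes would drift apart.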

So what happened? First, outcome barely mattered for either dependent variable: outcome and all of its interactions together accounted for only 1.9% and 3.3% of the total variance in the two DVs. Of course, in some areas of I/O, 3.3% of the variance is enough to justify something as extreme as theory revision, but in the reactions context, this is a pitifully small effect. So the researchers did not consider it further.

Second, the interview structure and decision structure manipulations created huge main effects. Each manipulation explained 14% of the variance in causality/control, and the full model accounted for 27%, which is a huge effect! For consistency, the effects were smaller but still present: 9% and 7% for the two manipulations, respectively, and 17% for the full model. People perceived managers as having less influence on the process under either type of structure, and because the interactions did not add much predictive power to the model, these effects were essentially independent.

One issue with this study is that these are “paper people.” Decisions about and reactions to paper people can be good indicators of the same situations when involving real people, but there’s an inferential leap required. So if you don’t believe people would react the same way to paper people as to real people, then perhaps the results of this study are not generalizable. My suspicion is that the use of paper people may strengthen the effect. So real-world effects are probably a little smaller than this – but at 27% of the variance explained (roughly equivalent to r = .52), there’s a long way down before the effect would disappear. So I’m pretty confident it exists, at the least.
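
The conversion from “percent of variance explained” to a correlation metric is just a square root. The short Python sketch below applies it to the full-model figures reported in this post, under the assumption that each percentage can be treated as an R²; the square root then gives the magnitude of a multiple correlation R, and the sign of the relationship is lost.

```python
import math


def r_from_variance_explained(prop):
    """Magnitude of the correlation implied by a proportion of variance explained (sqrt of R^2)."""
    return math.sqrt(prop)


# Proportions of variance explained, as reported in this post.
reported = {
    "Study 1, causality/control (full model)": 0.27,
    "Study 1, consistency (full model)": 0.17,
    "Study 2, causality/control": 0.22,
    "Study 2, consistency": 0.09,
}

for label, prop in reported.items():
    print(f"{label}: {prop:.0%} of variance, r about {r_from_variance_explained(prop):.2f}")
```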

Ok – so people really do seem to judge hiring managers negatively for adopting interview structure. But does that influence hiring manager behavior? Do they really fear being replaced?

In the second study, MTurk was used again, but this time anyone with no hiring experience was screened out of the final sample. This left 150 people with such experience, 70% of whom were currently in a position involving supervision of direct reports. In other words, the second study drew on people with current or former hiring responsibilities: people who could realistically be replaced by I/O technology.

The design was a bit different. Because outcome had shown essentially no effect, the researchers dropped it from the design, crossing only interview and decision structure (a 2 x 2, only 4 conditions). Perceptions of causality/control and consistency were assessed again. Additionally, the study measured perceived threat of unemployment by technology (“Consistently using this approach to make hiring decisions would lessen others’ beliefs about the value I provide to my employing organization.”) as well as intentions to use the approach (“I would use this approach to make the hiring decision.”).

The short version: this time, it was personal. The survey essentially asked hiring managers: if I used these techniques, what would happen? Could I be replaced?

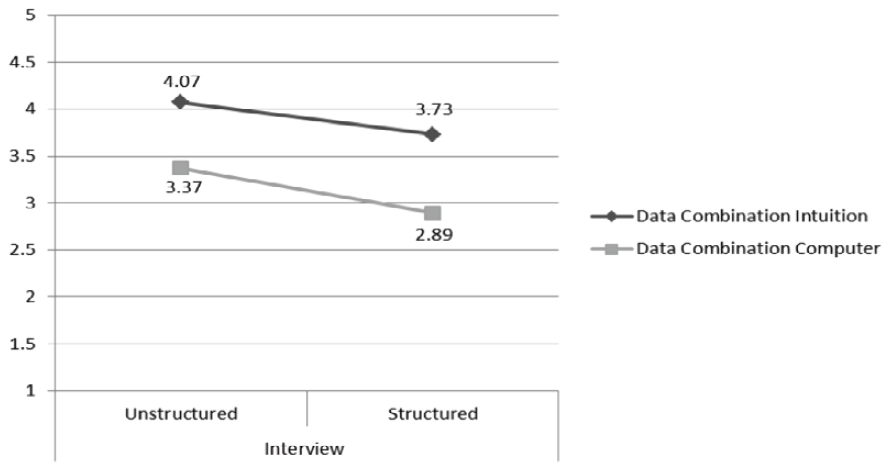

As you might expect with a sample of people who actually hire, the effects were a bit smaller this time, but still quite large: 22% of the variance in causality/control was explained, and 9% of the variance in consistency. You can see the effect on causality in this figure.

Nolan et al., 2016, Figure 3. Perceptions of causality/control by condition.

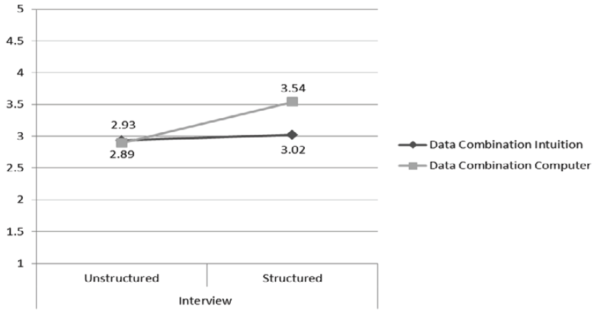

Interestingly, the consistency effect turned into an interaction: apparently having both kinds of structure is worse than just one.

Nolan et al., 2016, Figure 4. Perceptions of consistency by condition.

Nolan and colleagues also tested a path model confirming what we all expected:

- Managers who believe others will judge them negatively for using new technologies are less likely to use those technologies.

- This effect occurs indirectly via perceived threat of unemployment by technology.
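
An indirect effect like this is commonly estimated as the product of two slopes: a (predictor to mediator) and b (mediator to outcome, controlling for the predictor). The Python sketch below illustrates that product-of-coefficients logic on simulated data. It is not the authors’ model or estimation method (mediation analyses usually also bootstrap a confidence interval for a*b), and the variable labels and true slopes (0.5 and -0.4) are assumptions chosen purely for illustration.

```python
import random


def cov(u, v):
    """Sample covariance of two equal-length sequences."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    return sum((p - mu) * (q - mv) for p, q in zip(u, v)) / (n - 1)


def indirect_effect(x, m, y):
    """Return (a, b, a*b) for the simple mediation path X -> M -> Y."""
    a = cov(x, m) / cov(x, x)  # slope of M regressed on X
    # Slope of M when Y is regressed on both X and M (closed-form two-predictor OLS).
    vx, vm, cxm = cov(x, x), cov(m, m), cov(x, m)
    b = (cov(m, y) * vx - cov(x, y) * cxm) / (vm * vx - cxm ** 2)
    return a, b, a * b


# Hypothetical simulated data:
# x = expected negative judgment by others, m = perceived threat of
# technological unemployment, y = intention to use the approach.
rng = random.Random(2016)
n = 5000
x = [rng.gauss(0, 1) for _ in range(n)]
m = [0.5 * xi + rng.gauss(0, 1) for xi in x]   # true a = 0.5
y = [-0.4 * mi + rng.gauss(0, 1) for mi in m]  # true b = -0.4, no direct X -> Y path

a, b, ab = indirect_effect(x, m, y)
print(f"a = {a:.2f}, b = {b:.2f}, indirect effect a*b = {ab:.2f}")
```

A negative a*b here means that expecting negative judgments lowers intentions to use the approach by way of increased threat, which is the pattern described in the list above.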

In summary, some people responsible for hiring fear being replaced by technology, and this decreases their willingness to adopt those technologies. This may help explain a story I/Os often hear: a new practice is implemented in an organization, only for the I/O to discover later that nothing has changed because the managers never actually adopted it. In an era where robots are replacing many entry level jobs, this is a legitimate concern!

The key, I think, is to design systems for practice that take advantage of human input. There are many things that algorithms and robots can’t do well (yet) – like making ratings for structured interviews! Emphasizing this and ensuring it is understood up and down the chain of command could reduce this effect.

So for now, we know that being replaced by our products is something managers worry about. This suggests that simply selling a structured interview to a client and leaving it at that is probably not the best approach. Meet with managers, meet with their supervisors, and explain why people are still critical to the process. Only with that can you have some confidence that your structured interview will actually be used!

Always remember the human component to organizations, especially when adding new technology to a process that didn’t have it before. People make the place!

- Nolan, K. P., Carter, N. T., & Dalal, D. K. (2016). Threat of technological unemployment: Are hiring managers discounted for using standardized employee selection practices? Personnel Assessment and Decisions, 2(1).

One of the biggest challenges for psychologists is gaining access to research participants. We go to great lengths, from elaborate and creative sampling strategies to spending hard-earned grant money, to get random(ish) people to complete our surveys and experiments. Despite all of this effort, we often lack perhaps the most important variable of all: actual, observable behavior. Psychology is fundamentally about predicting what people do and experience, yet most of our research starts and stops with how people think and feel.

That’s not all bad, of course. There are many insights to be gained from how people talk about how they think and feel. But the Internet has opened up a grand new opportunity to actually observe what people do, in the form of their Internet behaviors. We can see how they communicate with others on social media, tracking their relationships and social interactions in real time, as they are built.

Despite all of this potential, surprisingly few psychologists actually do this sort of research. Part of the problem is that psychologists are simply not trained in what the Internet is or how it works. As a result, research studies involving internet behaviors typically involve qualitative coding, a process by which a team of undergraduate or graduate students reads individual posts on discussion boards, writing down a number or set of numbers for each thing they read. It is tedious and slow, which holds up research for some and makes the entire area too time-consuming to even consider for others.

Fortunately, the field of data science has produced a solution to this problem called internet scraping. Internet scraping (also “web scraping”) involves creating a computer algorithm that automatically travels across the Internet or a select piece of it, collecting data of the type you’re looking for and depositing it into a dataset. This can be just about anything you want but typically involves posts or activity logs or other metadata from social media.
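
The core of a scraper can be quite small. Below is a minimal Python sketch using only the standard library: `html.parser.HTMLParser` pulls author names and post text out of a page, and `csv.DictWriter` turns them into rows of a dataset. The forum markup (the `post`, `author`, and `body` class names) is hypothetical, since every site structures its HTML differently, and the page is hard-coded so the example runs offline. The `fetch_page` helper shows how a live page could be downloaded with `urllib.request.urlopen`, but it is not called here. This is an illustration, not the code from the Psychological Methods paper.

```python
import csv
import io
from html.parser import HTMLParser
from urllib.request import urlopen

# Hypothetical forum markup; real sites will use different tags and class names.
SAMPLE_PAGE = """
<div class="post"><span class="author">user_a</span><p class="body">Hang in there.</p></div>
<div class="post"><span class="author">user_b</span><p class="body">Thanks, that helps.</p></div>
"""


def fetch_page(url):
    """Download a page as text (not called in this offline example)."""
    with urlopen(url) as resp:
        return resp.read().decode("utf-8", errors="replace")


class PostParser(HTMLParser):
    """Collect {'author': ..., 'body': ...} records from the assumed markup."""

    def __init__(self):
        super().__init__()
        self.posts = []
        self._field = None   # field the current text belongs to, if any
        self._current = {}   # record being built for the current post

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if cls in ("author", "body"):
            self._field = cls

    def handle_endtag(self, tag):
        self._field = None
        # A closing </div> ends one post; store it and start a new record.
        if tag == "div" and self._current:
            self.posts.append(self._current)
            self._current = {}

    def handle_data(self, data):
        if self._field:
            self._current[self._field] = self._current.get(self._field, "") + data


def to_csv(posts):
    """Serialize the collected records as CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["author", "body"])
    writer.writeheader()
    writer.writerows(posts)
    return buf.getvalue()


parser = PostParser()
parser.feed(SAMPLE_PAGE)
print(to_csv(parser.posts))
```

In a real project, the same parser would be fed page after page as the scraper moves through a site, with each page’s records appended to one growing dataset.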

In a paper recently published in Psychological Methods, my research team and I set out to teach psychologists how to use these tools to create and analyze their own datasets. We do this in a programming language called Python.

Now if you’re a psychologist and this sounds a little intimidating, I totally understand. Programming is not something most research psychologists learned in graduate school, although it is becoming increasingly common.

R, a statistical programming language, is increasingly becoming a standard part of graduate statistical training, so if you’ve learned any programming, that’s probably the kind you learned. If you’re one of these folks, you’re lucky – if you learned R successfully, you can definitely learn Python. If you haven’t learned R, then don’t worry – the level of programming expertise you need to successfully conduct an Internet scraping project is not as bad as you’d think – similar to the level of expertise you’d need to develop to successfully use structural equation modeling, or hierarchical linear modeling, or any other advanced statistical technique. The difference is simply that now you need to learn technical skills to employ a methodological technique. But I promise it’s worth it.

In our paper, we demonstrated this by investigating a relatively difficult question to answer: are there gender differences in the way that people use social coping to self-treat depression? This is a difficult question to assess with surveys because you always see reality through the eyes of people who are depressed. But on the Internet, we have access to huge archives of people with depression trying to self-treat, in the form of online discussion forums intended for people with depression. So to investigate our demonstration research question, we collected over 100,000 examples of people engaging in social coping, as well as self-reported gender from their profiles. We wanted to know if women use social coping more often than men, if women are more likely to try to support women, if men are more likely to try to support men, and a few other questions.
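
As a rough illustration of how scraped records turn into answers to questions like these, the Python sketch below tabulates who replies to whom by self-reported gender. The records and field names are hypothetical toy data, not the study’s data or analysis; a real analysis would also need to handle missing or unreported gender and test differences statistically (for example, with a chi-square test) rather than just counting.

```python
from collections import Counter

# Hypothetical toy records standing in for scraped replies: each reply carries
# the replier's and the original poster's self-reported gender.
replies = [
    {"replier": "female", "poster": "female"},
    {"replier": "female", "poster": "male"},
    {"replier": "male", "poster": "male"},
    {"replier": "female", "poster": "female"},
    {"replier": "male", "poster": "female"},
]


def crosstab(records, row, col):
    """Count records for each (row value, column value) pair."""
    return Counter((r[row], r[col]) for r in records)


def same_gender_share(records):
    """For each replier gender, the proportion of replies aimed at a same-gender poster."""
    totals = Counter(r["replier"] for r in records)
    same = Counter(r["replier"] for r in records if r["replier"] == r["poster"])
    return {g: same[g] / totals[g] for g in totals}


print(crosstab(replies, "replier", "poster"))
print(same_gender_share(replies))
```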

The total time to collect those 100,000 cases? About 20 hours, a solid 8 of which I spent sleeping. One of the advantages of letting an internet scraping algorithm collect your data is that it doesn’t require much attention.
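
Part of what lets a scraper run unattended is building courtesy into its loop. The Python sketch below skips any URL a site’s robots.txt disallows (using the standard library’s `urllib.robotparser`) and pauses between requests. It is an assumption-laden illustration, not the paper’s procedure: the robots.txt text, the URLs, the user-agent name, and the 2-second default delay are hypothetical, and `fetch` is passed in as a function so the loop can be tested offline. Against a live site, you would typically load the real rules with `RobotFileParser.set_url()` and `read()`.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents for an imaginary forum.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def polite_crawl(urls, fetch, robots_lines, user_agent="research-bot", delay=2.0):
    """Fetch each URL that robots.txt allows, pausing `delay` seconds after each request.

    `fetch` is any function that takes a URL and returns the page text.
    """
    rules = RobotFileParser()
    rules.parse(robots_lines)
    pages = {}
    for url in urls:
        if not rules.can_fetch(user_agent, url):
            continue  # skip paths the site asks crawlers to avoid
        pages[url] = fetch(url)
        time.sleep(delay)  # throttle so the crawl doesn't burden the server
    return pages


# Offline usage with a stand-in fetch function and no delay.
pages = polite_crawl(
    ["https://example.org/forum/1", "https://example.org/private/2"],
    fetch=lambda url: "<html>" + url + "</html>",
    robots_lines=ROBOTS_TXT.splitlines(),
    delay=0,
)
print(list(pages))
```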

Imagine the research questions we could address if more people adopted this technique! I can hear the research barriers shattering already.

So I have you convinced, right? You want to learn internet scraping in Python but don’t know where to start? Fortunately, this is where my paper comes in. In the Psychological Methods article, which you can download here with a free ResearchGate account, we have laid out everything you need to know to start web scraping, including the technical know-how, the practical concerns, and even the ethics of it. All the software you need is free to use, and I have even created an online tutorial that will take you step by step through learning how to program a web scraper in Python! Give it a try and share what you’ve scraped!