The annual meeting of the American Psychological Association has moved fully online this year, and it’s actually pretty good – the online platform is easy to search and use, making finding interesting content very straightforward. They have a live program running for 3 days that you can tune into, plus virtual content that will be available for about a month. All pretty cheap, as low as $15 for student APA members to attend.

The virtual content includes a full program put together by SIOP: 1 invited address, 5 symposia, 1 discussion (panel), and 34 posters. Much of this content is in video, which personally I believe is necessary for a decent conference experience! So given that, I wanted to promote this portion of the SIOP program. Also, I gave the invited address! So it’s definitely a little selfish too. Here’s all the content you can experience on video from Division 14 (but check out the other divisions too!):

Invited Address

- How Can Artificial Intelligence Be Used to Better Ask and Answer Research Questions? by Richard Landers

Symposia

- Careers in Undergraduate I/O Psychology by Brian Loher, Kristian Veit & Abby Mello

- Toxic Workplaces and Abusive Leaders by Sobia Nasir, Ozge Can, Adam Chittenden, Benjamin Thomas, Annick Parent-Lamarche, Claude Fernet & Stephanie Austin

- Are Generational Categories Meaningful Distinctions for Workforce Management? by Nancy Tippins, Eric Anderman, Dana Born, Brian Hoffman, Arne Kalleberg & Julia Schuck

- Engagement in the Workplace by Zorana Ivcevic, Julia Moeller, Zehabit Levitats, Eran Vigoda-Gadot, Dana Vashdi, Vignesh Murugavel, Roni Reiter-Palmon, Joseph Allen, Isaac Lundquist, William Kramer & Crista Taylor

- Legal Updates for I/O Psychologists: Analyzing Recent Supreme Court Cases and Workplace Implications by Eli Noblitt & Kathy Rogers

Discussion/Panel

- What’s Happening at APA and SIOP? Q&A with Division 14/SIOP Council Representatives by Tammy Allen, Jeff McHenry, Patrick O’Shea & Sara Weiner

Posters

- Generation Z at Work: Difference Makers Demanding Rewarding Experiences, Challenge, and Leader Mentoring by Yvette Prior

- Closing the Gap Between Theory and Practice: Offering I/O Master-Level Internships Online by Maria Antonia Rodriguez

- Exploring Corporate Communicative Competence and Upgrading Communicative Skills in the Bilingual, Multicultural, and Digital Kazakhstan by Sholpan Kairgali

- African-American Women Government Contractors’ Experiences of Work Engagement by Rajanique Modeste

- Examining the Process of Men Successfully Mentoring Women in Nontraditional Occupations by Amy Foltz

- Do Social Class Background and Work Ethic Beliefs Influence the Use of Flexible Work Arrangements? by Anna Kallschmidt

- Work-Family Conflict and Professional Conferences: Do We Walk the Talk? by Vipanchi Mishra

- The Practice of Mindfulness, Coping Mechanisms on Mental Fatigue by Lisa Thomas

- In the Heat of the Moment: Using Personality as a Predictor of Firefighter Decision-Making by Chance Burchick

- Factors That Influence the Tenure of Direct Support Professionals in the Intellectual and Developmental Disabilities Field by Hirah Mir

UPDATE: If you missed the live event, you can still check out a recording here! This will probably only stay posted for a few weeks, so get it while you can!

If you’re in the gaming space, or if you have a kid whom you’ve watched over their shoulder while they played Minecraft, you’ve probably seen a Let’s Play.

Let’s Plays are live, narrated explorations of video games. A person plays the game but also provides a bit of think-aloud as they play, and people watch in real time. “That was weird!” “That was cool!” “Here’s what I’m planning to do next.” It is sort of a combination of think-aloud and voyeurism?

If you’ve ever heard of the media streaming service Twitch, it’s devoted to Let’s Play livestreams. The audience also can post text contributions to the livestream, which the streamers see and respond to (or don’t).

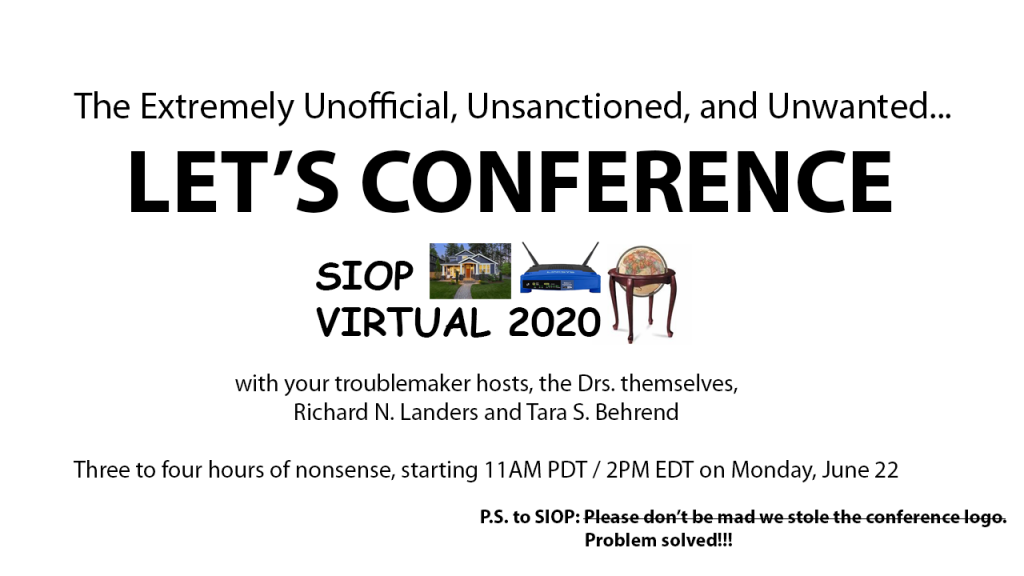

With that background, I am proud to announce that Tara Behrend and I will be hosting Let’s Conference: Virtual SIOP 2020. In the event, we’ll be live-watching SIOP video sessions on Monday, June 22 from around 2PM EDT / 11AM PDT through 6PM EDT / 3PM PDT and providing commentary. Maybe. We might end early if we get tired of doing it for whatever reason, no promises.

Let me first be perfectly clear. Although Tara and I are both highly involved in the SIOP organization, this is not an official, recognized, or even clearly allowed thing we’re doing. We’ve gone rogue! ROGUE I SAY!

So what’s the schedule? That’s still being finalized, but here’s the general docket:

- The interesting parts of the plenaries.

- Random friends and other colleagues whom we are pretty sure wouldn’t mind us ripping into them but we aren’t going to ask them ahead of time just to be safe.

- GRAD STUDENT FRESH MEAT. Ok, just kidding. We will probably watch some grad students at some point by watching symposia that sound interesting, but rest assured it will be constructive and thoughtful, unlike commentary on friends whom we will be destroying just because we enjoy that sort of thing, you know who you are.

- Anyone who wants to be! If you’d like your video to be included, please comment below and we’ll try to get your session on the roster!

- Suggestions of live audience members! Because Let’s Conference will be a live experience, we will be watching audience comments and taking your advice when we feel like it.

In between sessions, we’ll be chatting about what we learned, what we didn’t, and discussing audience comments/questions.

You might ask why we’re doing this… to you I say, why not!?!

When the show goes live, you’ll find it here on YouTube Live. Here’s a tentative schedule, although there’s basically zero chance we will actually get to all of these sessions:

- Opening Plenary

    - Scott Tonidandel – Conference Welcome

    - Georgia Chao – Introduction to Eden King

    - Eden King – Outgoing President’s Message

    - SIOP Foundation Visionary Circle Presentation

- Navel Gazing Hour

    - 0005: Pay Equity Analysis: Hey, Labor Economist—Anything You Can Do I-O Can Do Better! (Master Tutorial)

    - 0912: SIOP Select: Revising the APA Ethics Code to Better Fit the Roles of I-O Psychologists (Special Event)

    - 0446: Know Your Worth: Results From the 2019 SIOP Salary Survey (Alternative Session Type)

- Poster Break 1

    - Just a Look Around

- Social Justice and Current Events Hour

    - 0002c: COVID-19 – Selection & Testing

    - 0137: Applying I-O to Healthcare: Opportunities for Research and Practice (Ignite)

    - 0329: Where Are the Men? Bringing Men Into the Mix to Push for Workplace Gender Equality (Symposium)

    - 0015: WHAT Is Inclusion? Its Past, Present, and Future (Special Event)

- Poster Break 2

    - Deep Dive into Technology/Gamification/Social Media/Simulations

- Methods Hour

    - 0815: Using MetaBUS to Locate, Synthesize, and Visualize I-O Research Findings (Master Tutorial)

    - 0016: Life in the Fast Lane: Advances in Rapid Response Measurement (Symposium)

    - 0207: Excel Can Do That Too? Five Fantastic Functions for I-Os (Ignite)

    - 0868: Trust in AI (Panel Discussion)

- Poster Break 3

    - Deep Dive into Testing/Assessment/Selection Methods/Validation

- Closing Plenary

Go ahead and click over now to set a reminder for yourself to watch the live show. To participate, you’ll need a computer or mobile device capable of watching YouTube. See you there!

If there’s one thing that white-collar workers around the world suddenly have in common because of COVID-19, it’s that we are now tied to our keyboards even more than before. And some of us were very tied to our keyboards!

Unfortunately, as you probably suspected, sitting in front of a computer keyboard for 8 hours a day is not particularly good for your health.

The bigger rules about using a computer at a desk are, I feel, more common knowledge. Drink lots of water. At least once an hour, stand up and walk around for at least 10 minutes, and either go outside or look out a window to the horizon. Sit with good posture.

These things seemed obvious to me. What was less obvious is what to do about my wrists. If a high proportion of your job is about writing, you’ve probably already faced this problem, although you might not have acted on it already.

Anywhere from a few days to a few years into typing 8 hours a day, you will begin to get random wrist pain. At first, it’s just a twinge every once in a while. A moment where you need to rub your wrist and think, “That never did that before.” Next, it’ll be mild throbbing but only at the end of the day. Then you’ll notice it more throughout the day until it becomes unbearable. These are all signs that you’re on the path to permanent wrist issues, and at the end of that road is surgery!

As an academic who spends many hours a day writing in front of a computer (and even more now that all of my teaching is at home too!), I know this road well. That is what led me to research how to design my home office (and eventually my real office, in the magical future where we aren’t sheltered-in-place anymore) ergonomically, to protect my wrist health for years to come.

If you don’t do these things, the best case is that you’ll have moderate but unnecessary wrist pain. The worst case is carpal tunnel syndrome, which is marked by tingling, pain, and throbbing in your fingers and wrists and can eventually require surgical correction. So take steps now to set yourself on a better path.

Here are six general recommendations, some free and some not. At a minimum, you should do #1, #2 and #3 today!

- Take breaks. As I described earlier, for your general health, you should stand up and walk around for at least 10 minutes at least once an hour (to reduce the chance of blood clots or other heart-related issues), and use that time to go outside or look out a window to the horizon (to reduce eye strain). Another benefit to such breaks is that you give your wrists some time off! Let them just hang loosely and relax while you wander around – don’t hold your phone!

- Stay flexible. One of the easiest and cheapest things you can do to reduce wrist pain is to increase wrist strength through basic physical therapy that you can complete at your desk. Every day, and especially every time you feel that little wrist twinge, spend a few minutes trying out these wrist exercises.

- Sit at an appropriate height relative to your keyboard. Chair height is a very tricky thing to get right. In addition to sitting with good posture, you should be sitting at a height so that your hips are level with or very slightly below your knees, which takes the pressure off the arteries in your legs (important for avoiding blood clots!). But it’s tricky because you should simultaneously maintain a 90-degree (right) angle or larger at the elbow, meaning that your arms should angle down, away from you. See these diagrams from Cornell. In practice, it is next to impossible to do this if your keyboard is on a desk. Instead, you likely need a keyboard tray that hangs below the level of your desk, like this one or this one. These trays come in a variety of types, but the main thing to consider is this: do you want to bolt one to your desk, or do you want one that clamps on?

- Use a vertical mouse or trackball. You might think that mice are all the same, but ergonomic mice are vertical, meaning that you hold them in a more natural angled wrist position instead of flat. Try this: place your hands loosely in your lap and notice what your wrist does – you will notice that the top of your hand angles slightly outwards. Thus, an ergonomic vertical mouse will replicate this angle to avoid wrist strain. I personally use this one by Anker but in the wired version, which has been great (and was cheap), but Logitech has its own design if you prefer a more familiar brand name (although Anker is mainstream among the tech crowd!).

In contrast to vertical mice, trackballs have always been sort of a weird cousin to mice more broadly, and that’s still true today. Nevertheless, they are available in a wide variety of styles, some combined with traditional desk-mouse tracking and some not, which tend to be a bit more ergonomic (i.e., they have wrist tilt). There are even handheld versions that you can use without a desk!

- Use a split keyboard with lifters and mechanical switches. Ok, so a lot of new concepts with this one. Split keyboards are keyboards where the left and right halves are split into two physical pieces, which allows you to place them wherever you want – typically slightly angled out to match the natural position of your wrists when sitting with good posture.

Lifters allow you to angle the keyboard to match your natural wrist angle horizontally, the same way a vertical mouse does.

Mechanical switches are individually actuated so that there is “give” when you press each key; the key gradually firms up as you press it further down, which gives a bit of a “bounce” to your typing and softens the impact on your fingers (one hard key impact doesn’t matter – tens of thousands of presses over the course of a day do). Mechanical switches are in contrast to a “membrane key” switch, which is what you typically find in laptops – the top of the key and bottom of the key are very close together, so every tap is a pretty hard hit.

It’s actually surprisingly difficult to find all three of these features in one keyboard at a decent price, so in order of priority, I would suggest split, then mechanical, then lifters.

If you do want to get all three, I have two suggestions. The first is the keyboard I use, the Kinesis Pro with Cherry MX Mechanical Switches, plus its matching lifters. If you instead want to dive off the deep end into the world of absolute keyboard customizability, I would suggest the ErgoDox EZ. The ErgoDox EZ was actually a crowdfunded project to create the “perfect” split, lifted, mechanical keyboard. It is intended for “power users” and even requires some time to retrain yourself to use it. It honestly seems like a bit of a cult.

One thing that surprised me when getting into ergonomic computer accessories was just how pricey they were, which is largely driven by the mechanical switches – they must each, one per key, be manufactured and installed separately, which drives the price way up, as does the two-piece form factor. So if you don’t mind sacrificing the switches and keeping it all a single unit while maintaining some of the benefits of the angles, the Microsoft Sculpt has been popular lately, as has the Perixx.

- Use mouse and keyboard wrist support rests. If you have proper posture and use a keyboard tray, this is less important, but I would still suggest a good set of pads. One of the worst things you can do is rest your wrist along the corner of your desk (pinching nerves and blood vessels), and not far behind that is resting your wrist on the hard surface of your desk rather than floating it in midair above your keyboard. In either case, a good wrist rest will provide insurance that you won’t accidentally rest your wrists somewhere where they’ll be pinching something important. And fortunately, rests are pretty cheap insurance!

That’s it! Good luck and happy typing in your new COVID-19 telework job!